Leading in the Age of AI: Power, Promise, and the Human 30%

By Lucy Petroucheva, Consultant at Dowling Street

When we sit down with leaders to discuss AI, two concerns tend to dominate.

The first is skepticism: AI is not all that powerful and the hype is overblown. It is sometimes useful, often inaccurate, and nothing close to intelligence. Leaders do not want to be naïve.

The second is fear: AI is dangerous and perhaps best avoided altogether. This view imagines AI exploiting data, corroding trust, pushing change faster than leaders can steer, and even threatening humanity itself.

Leaders rarely voice these concerns to their teams, in front of whom they feel pressure to project fluency and openness, but they acknowledge them in private. And both concerns contain truth. It is wise to doubt miracle-level claims about AI, and to scrutinize what we entrust to it.

However, taken as dominant stances, these perspectives are distractions. They slow leaders down, narrow their imagination, and prevent them from experimenting with what is possible.

We suggest two complementary stances instead:

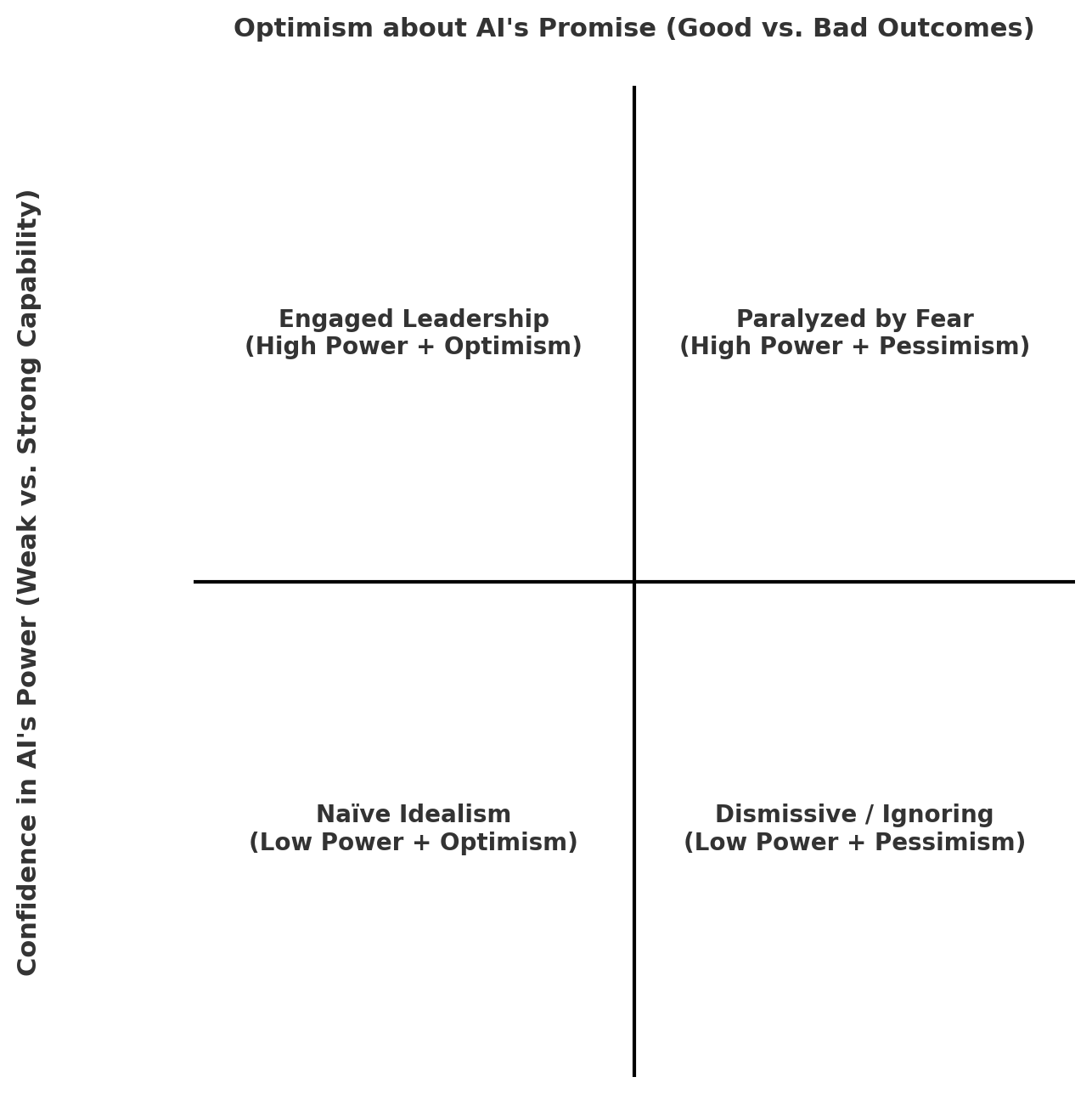

AI Promise vs. AI Power 2×2 Matrix (Credit: Lucy Petroucheva/Dowling Street)

Confidence in AI's Power: the recognition of AI's technical potential and performance—the belief that it can become an extraordinarily capable force in reasoning, strategizing, solving complex problems, and perhaps surpassing human ability in specific domains.

Optimism about AI's Promise: the attitude that AI can help build a better world.

A leader can hold one of these positions without the other. One may believe AI will become immensely powerful while fearing that society will fail to use that power wisely. Or one may hope that AI will help humanity while believing it will never be more than a sophisticated tool.

To lead well, however, both are necessary. Confidence in AI’s power without optimism about the direction of its outcomes leads to paralyzing fear. Optimism without confidence in its power leads to naïve idealism. Together, they create engagement: openness to opportunity without blindness to risk.

For business leaders, this combination matters because it shapes how they mobilize teams, allocate resources, experiment with strategy, and position their organizations for the future.

The Case for Confidence in AI's Power

Leaders often dismiss AI reflexively:

“It can’t do strategy.” Yet AI is already producing market analyses, strategic frameworks, and decision simulations.

“It can’t do relational work.” Yet people are turning to AI for coaching prompts, therapy-like dialogues, and mediation scripts. While these do not replace human presence, they provide meaningful support and relational guidance, much as a good book or framework can shift one’s perspective on oneself and one’s relationships.

“It’s just autocomplete.” At the core, yes. But when autocomplete operates at massive scale, improves every few months, and is embedded into your workflow, it begins to redefine how knowledge is created, shared, and applied.

History should caution us against declaring what AI will never do. Progress is nonlinear. Capabilities once thought decades away have appeared suddenly.

Even today, AI draws on a knowledge base far larger than any individual’s and is already embedded in search, productivity suites, code editors, and design tools. Its outputs are no longer isolated predictions; they are integrated into real workflows. As we continue granting AI deeper access to our data, richer context, and tighter integration with our tools, its role will expand. It will not merely ask sharper questions or scope issues more effectively; it will take on more of the decision-making at the project level, in many cases surpassing human judgment. We should be open to this possibility, not as an inevitability or a threat, but as a shift worth anticipating, shaping, and preparing for.

Confidence in AI's power doesn't require imagining sentient machines tomorrow; it requires acknowledging rapid advancement, compounding improvements, and the trajectory toward AI that can handle not just tasks, but entire projects and roles.

If leaders dismiss AI’s power, they stop experimenting. They forfeit the chance to reimagine workflows, prototype new offerings, and anticipate disruption in their industries. Remaining open and curious allows them to spot opportunities others overlook. It is like insisting electricity would never catch on and refusing to wire a building, only to watch the world light up while remaining in the dark.

The Case for Optimism about AI's Promise

It would be comforting to point to thoughtful AI ethics initiatives and argue that AI is being guided by people with the best intentions and a deep concern for human flourishing—that everything is safely on track. But the optimism we recommend rests on something simpler: recognizing that when attention is fixed only on what could go wrong, we lose the ability to imagine what could go right.

Nightmare scenarios—job losses, manipulative algorithms, systems indifferent to human survival—deserve our attention. But if the future is assumed to be doomed, why bother shaping it?

Optimism is not about denying risk. It is about deliberately focusing energy on what might be possible, useful, and worth building. As Noam Chomsky wrote: “Optimism is a strategy for making a better future. Because unless you believe the future can be better, it’s unlikely you will step up and take responsibility for making it so.”

Whether pessimists or optimists ultimately prove correct is unknown. What is clear is that the odds of a better future rise when optimists are engaged in shaping it.

Optimism is strategic. It keeps teams and organizations motivated and innovative. Without it, leaders default to defense. With it, they mobilize toward opportunity.

The 70% Thought Experiment

Let’s ground this in a thought experiment.

Imagine that within a few years, AI can do 70% of your job—and do it well. Really imagine this.

Even today, we see hints of this. AI can already take many tasks about 70% of the way: summarizing an article, drafting a proposal, sorting data, editing a video, or generating an image. But it still cannot carry things across the finish line. That part is up to you:

Frame the problem (What are we even trying to do?)

Refine the output (Does this version fit the tone, the context, the audience?)

Make judgments (What matters most here? What are the tradeoffs?)

Ensure alignment (Is this consistent with our goals, values, strategy?)

In this mode, AI is a collaborator, powerful but partial, unable to fully grasp nuance, intent, or long-term implications. You remain the guide and responsible party.

Now extend this to the job level. If 70% of your role is automated, the nature of your work changes. Execution is no longer the centerpiece. Your job is no longer defined by tasks but by leadership qualities:

Orchestration: Aligning people, tools, and strategy.

Meaning-making: Translating work into purpose, and spotting dynamics that don’t fit clean models.

Trust-building: Building relationships, navigating conflict, and leading with integrity.

Ethical navigation: Weighing not just what works, but what is right and what serves the greater good.

Imagination: Envisioning new paradigms of work and culture.

This is not about layering AI onto existing work. It is about redefining what work itself looks like. Begin asking: What is my 30%? What uniquely human contribution do I—and my team—bring? How can we elevate it into our core value proposition?

When we see both our own work and our bosses’ work being automated, “moving up” will not be enough. The entire ladder is being reshaped. This is not just about career progression; it is about how organizations reinvent their value in a world where AI supports both execution and strategy.

For leaders, this means helping their people reframe their contribution, rethinking how their organizations create value, and guiding clients through the same uncertainty they face themselves.

These are fast becoming the defining features of leadership in an AI-saturated world. Those who thrive will not merely delegate the 70%; they will elevate the 30% for themselves, their teams, their organizations, and the communities they serve. The sooner leaders begin exploring that future contribution, the more space they will create to grow into it.

Leading Forward

If you dismiss AI as weak, you will ignore it. If you believe it is only dangerous, you will avoid it altogether. In either case, you relinquish the chance to shape how it transforms your work, your organization, and your future.

The leaders who thrive will not be those who guess correctly about AI's limits. They will be those who remain engaged—confident in its growing power, optimistic about its promise, and intentional about elevating the human 30%.

Do not cling to yesterday's version of your work; begin to imagine the contribution only you can make tomorrow. When confidence in AI's power is combined with optimism about its promise, leaders place themselves and the people they serve in the best position to create a meaningful and flourishing future.